We use Jenkins as the continuous integration server on our current project at 99X Technology. We also run a second Jenkins instance on a separate server, which executes periodic batch processing jobs against production data.

When a Jenkins job fails, Jenkins sends an automated email to the dev email group. In practice, those notifications easily sit unnoticed in developers' mailboxes. Sometimes things stay broken for a large part of the day, especially for products that are not actively developed and for batch processing jobs. So I wanted a way for the team to notice quickly when something goes wrong.

A couple of years ago, one of my colleagues built a similar Jenkins light. Back then it took a lot of electronics work to get it up and running. The integration with the Jenkins server was done through a Jenkins notification application called the Hudson tray application. We faced many integration issues there, which made that Jenkins light rather unstable.

With IoT devices so common these days, we found a handy little Wi-Fi-enabled board, the Photon from particle.io, to wire the LEDs to. One big advantage of Particle is that it provides a free API you can use to send a message to your Photon without having to set up any infrastructure yourself. So all we needed to do was expose an API function from the Photon and make an HTTP request from Jenkins to our api.particle.io endpoint with the desired state of the lights (green or red).

Getting started with the Photon was really easy. I just claimed my device through https://setup.particle.io/ and configured the Wi-Fi settings, and the device was up and running within a few seconds!

I am no expert in electronic circuits, so I started with the blink-an-LED hello world app. I soon realized it was the ideal starting point for building the Jenkins light. Particle’s web IDE at https://build.particle.io/build is a handy dev environment, and loading code onto my Photon is super easy.

We built the pilot Jenkins light with two LEDs connected to different pins of the Photon. I exposed a function from my device code to api.particle.io and then used the Jenkins post-build task plugin to send an HTTP request to the Particle API via a Windows shell script. The script uses the curl command-line tool to check whether any Jenkins job has failed.
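For readers who prefer a script over curl, the same flow can be sketched in Python. This is only an illustration, not the script from the gist: the cloud function name `setLight`, the device ID, and the token are hypothetical placeholders, and it assumes the Jenkins JSON API reports a failed job with a `color` that starts with `red`.

```python
import json
import urllib.parse
import urllib.request

PARTICLE_API = "https://api.particle.io/v1/devices"


def any_job_failed(jobs):
    """Return True if any Jenkins job reports a failed ('red') last build."""
    # Jenkins appends "_anime" to the color while a build is running.
    return any(job.get("color", "").startswith("red") for job in jobs)


def fetch_jobs(jenkins_url):
    """Read job names and status colors from the Jenkins JSON API."""
    url = jenkins_url.rstrip("/") + "/api/json?tree=jobs[name,color]"
    with urllib.request.urlopen(url) as response:
        return json.load(response)["jobs"]


def build_light_request(device_id, function_name, access_token, state):
    """Build the POST that calls a cloud function exposed by the Photon."""
    body = urllib.parse.urlencode({"arg": state}).encode("ascii")
    request = urllib.request.Request(
        f"{PARTICLE_API}/{device_id}/{function_name}", data=body, method="POST"
    )
    request.add_header("Authorization", f"Bearer {access_token}")
    return request


def update_light(jenkins_url, device_id, function_name, access_token):
    """Check all jobs and switch the light to red or green accordingly."""
    state = "red" if any_job_failed(fetch_jobs(jenkins_url)) else "green"
    request = build_light_request(device_id, function_name, access_token, state)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling something like `update_light("http://localhost:8080", "YOUR_DEVICE_ID", "setLight", "YOUR_TOKEN")` from a post-build step would then play the role of the Windows script.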

Have a look at the Particle code and Windows script we used: https://gist.github.com/isurusndr/88b4b5ae6367fe73464523894183abc1

Here is the final result, with two LEDs plugged into the Photon board as a pilot project. It is USB powered and really easy to move around. We’ve been testing our pilot Jenkins light for a few days now, and I am really happy with how quickly we can notice failures in Jenkins.

Since then we have improved our pilot Jenkins light by adding LED matrix panels for better visibility. We also extended it to drive two sets of lights from the same Photon: one for the Jenkins doing CI and one for the Jenkins handling batch processing of data.

Isuru Senadheera's Blog

A journal of my thoughts, adventures and experiences. #programming #software #photography #travel #leo

Tuesday, December 26, 2017

Thursday, October 19, 2017

Best Practices for API Error Handling

Error codes are almost the last thing that you want to see in an API response. Generally speaking, it means one of two things — something was so wrong in your request or your handling that the API simply couldn’t parse the passed data, or the API itself has so many problems that even the most well-formed request is going to fail. In either situation, traffic comes crashing to a halt, and the process of discovering the cause and solution begins.

That being said, errors, whether in code form or simple error response, are a bit like getting a shot — unpleasant, but incredibly useful. Error codes are probably the most useful diagnostic element in the API space, and this is surprising, given how little attention we often pay them.

Today, we’re going to talk about exactly why error responses and handling approaches are so useful and important. We’ll take a look at some common error code classifications the average user will encounter, as well as some examples of these codes in action. We’ll also talk a bit about what makes a “good” error code and what makes a “bad” error code, and how to ensure your error codes are up to snuff.

The Value of Error Codes

As we’ve already said, error codes are extremely useful. Error codes in the response stage of an API are the fundamental way in which a developer can communicate failure to a user. This stage, sitting after the initial request stage, is a direct communication between client and API. It’s often the first and most important step towards not only notifying the user of a failure, but also jump-starting the error resolution process.

A user doesn’t choose when an error is generated, or what error it gets — error situations often arise in instances that, to the user, are entirely random and suspect. Error responses thus are the only truly constant, consistent communication the user can depend on when an error has occurred. Error codes have an implied value in the way that they both clarify the situation, and communicate the intended functionality.

Consider for instance an error code such as “401 Unauthorized – Please Pass Token.” In such a response, you understand the point of failure, specifically that the user is unauthorized. Additionally, however, you discover the intended functionality — the API requires a token, and that token must be passed as part of the request in order to gain authorization.

With a simple error code and resolution explanation, you’ve not only communicated the cause of the error, but the intended functionality and method to fix said error — that’s incredibly valuable, especially for the amount of data that is actually returned.
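As a rough sketch of what such a response could look like on the server side, the snippet below builds a 401 that states both the failure and the fix. The body field names (`error`, `message`) and the `realm` value are illustrative assumptions, not a prescribed format; the `WWW-Authenticate` header is the standard HTTP way to name the expected authentication scheme.

```python
import json


def unauthorized_response(realm="api"):
    """Build a 401 response that names the failure and the way to fix it."""
    status = 401
    headers = {
        "Content-Type": "application/json",
        # Tells the client which authentication scheme the API expects.
        "WWW-Authenticate": f'Bearer realm="{realm}"',
    }
    body = json.dumps({
        "error": "Unauthorized",  # what went wrong (hypothetical field name)
        "message": "Please pass a token in the Authorization header.",  # how to fix it
    })
    return status, headers, body
```

The payload stays small, yet the client learns the point of failure and the intended usage from one response.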

Conclusion

Much of an error code structure is stylistic. How you reference links, what error code you generate, and how to display those codes is subject to change from company to company. However, there has been headway to standardize these approaches; the IETF recently published RFC 7807, which outlines how to use a JSON object as a way to model problem details within an HTTP response. The idea is that by providing more specific machine-readable messages with an error response, the API clients can react to errors more effectively.
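To make the RFC 7807 idea concrete, here is a minimal sketch that assembles a problem details object. The member names (`type`, `title`, `status`, `detail`, `instance`) and the `application/problem+json` media type come from the RFC; the helper function itself and the sample values are only an illustration.

```python
import json

PROBLEM_JSON = "application/problem+json"  # media type defined by RFC 7807


def problem_details(status, title, detail=None, type_uri="about:blank",
                    instance=None, **extensions):
    """Assemble an RFC 7807 problem details object as a plain dict."""
    problem = {"type": type_uri, "title": title, "status": status}
    if detail is not None:
        problem["detail"] = detail
    if instance is not None:
        problem["instance"] = instance
    # Extension members carry extra machine-readable data for the client.
    problem.update(extensions)
    return problem


body = json.dumps(problem_details(
    403,
    "You do not have enough credit.",
    detail="Your current balance is 30, but that costs 50.",
    type_uri="https://example.com/probs/out-of-credit",
    instance="/account/12345/msgs/abc",
    balance=30,
))
```

A client can branch on `type` or `status` programmatically and still show `title` and `detail` to a human.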

In general, the goal with error responses is to create a source of information to not only inform the user of a problem, but of the solution to that problem as well. Simply stating a problem does nothing to fix it – and the same is true of API failures.

The balance then is one of usability and brevity. Being able to fully describe the issue at hand and present a usable solution needs to be balanced with ease of readability and parsability. When that perfect balance is struck, something truly powerful happens.

While it might seem strange to wax philosophical about error codes, they are a truly powerful tool that goes largely underutilized. Incorporating them in your code is not just good for business and API developer experience; it can also lead to a more positive end user experience, driving continued adoption and usage.

Tuesday, October 18, 2016

Inserting Large Amount of Records into Database with SqlBulkCopy

Recently we came across an interesting situation while analyzing a performance bottleneck in a data importer implementation. The existing data importer in the project I was working on issued an individual SQL insert query for each data record to be inserted into a database table. Obviously, inserting thousands of records into the database this way was a heavy operation.

The good news is that some handy solutions exist to handle such situations much more efficiently. We mainly considered two approaches: table-valued parameters and the SqlBulkCopy class. Microsoft SQL Server also includes a popular command-line utility named bcp for quickly bulk copying large files into tables or views in SQL Server databases.

The SqlBulkCopy class can write data only to SQL Server tables. The data source, however, is not limited to SQL Server; any data source can be used, as long as the data can be loaded into a DataTable instance or read with an IDataReader instance.

Our data records were in a text file, written in a specific CSV-like format. We were using an Entity Framework-like data access layer, so the solution can be generalized to Entity Framework as well.

We had to implement a file data reader class to read the file data into an IEnumerable.

    private IEnumerable GetFileContents(String filePath)
    {
        // read the file and other logic

        foreach (var dataLine in dataLines)
        {
            // some other logic
            yield return entityObject;
        }
    }

Since there was no existing implementation of IDataReader that uses an enumerator as its data source, we had to write our own. Sample code can be found in my gist: https://gist.github.com/isurusndr/450cfd0b2b19cd92ca322685d648877d

Calling SqlBulkCopy is straightforward.

    public static void BulkCopy(IEnumerable items, string connectionString)
    {
        var bulkCopy = new SqlBulkCopy(connectionString);
        var reader = new EnumerableDataReader(items);

        // Map source columns to destination columns.
        foreach (var column in reader.ColumnMappingList)
        {
            bulkCopy.ColumnMappings.Add(column.Key, column.Value);
        }

        bulkCopy.BatchSize = 10000;       // rows sent to the server per batch
        bulkCopy.BulkCopyTimeout = 1800;  // seconds
        bulkCopy.DestinationTableName = "EntityTable";
        bulkCopy.WriteToServer(reader);
        bulkCopy.Close();
    }

Keep in mind not to set the BatchSize property of SqlBulkCopy too low. The higher the batch size, the better the performance would be.

The performance improvement we got was fantastic. We brought the time to insert a sample data set down from an initial 8 minutes to less than 30 seconds.
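To illustrate why a larger batch size tends to help, here is a small Python analogue of the streaming idea. It is not the C# implementation from the gist: it only shows how a lazy record stream (the counterpart of `yield return`) can be grouped into batches, so that fewer, larger batches mean fewer round trips. The function names are made up for this sketch, and real SQL Server performance depends on more than the batch count.

```python
from itertools import islice


def read_records(lines):
    """Lazily turn CSV-like lines into tuples, one record at a time."""
    for line in lines:
        line = line.strip()
        if line:  # skip blank lines
            yield tuple(line.split(","))


def batches(records, batch_size):
    """Group a lazy stream of records into lists of at most batch_size."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# 25,000 rows with a batch size of 10,000 need only three round trips.
batch_sizes = [len(batch) for batch in batches(range(25000), 10000)]
```

Because both functions are generators, the whole file never has to sit in memory at once, which is the same property the enumerator-backed IDataReader provides.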

Wednesday, August 31, 2016

Cheap Chinese Mini LED Projectors

A few months back, when I was looking for a reasonably priced projector for presenting in meetings, I found the mini LED projectors on eBay quite interesting. There are quite a lot of models available at various price ranges.

These projectors are called simplified micro projectors (SMP). An SMP is a kind of projector with a simplified system design that still offers multiple functions. They usually use an LCD as the imaging system and an LED as the light source.

The most important thing I have to tell you is not to keep high expectations if you decide to buy a cheap projector. You get fewer, more basic features for the lower price you pay.

These projectors usually cannot be used in brightly lit environments. Cheap SMPs cannot produce a very large picture, but you can get a decent 34 to 130 inch image in a fairly dark environment. The picture isn't very bright, so you get the best output in a dark room. Hence, think about your requirements before buying one. It would suit home cinema well. For a presentation in a fairly dark room, it will still do a reasonable job. Beyond that, you will have to go for an expensive projector.

After a few weeks of reading on the internet, I finally ordered the Unic UC40. My budget was around 80 USD, and the UC40 was the best option according to some reviews. Alternatively, the GM 60 (another reference) and the RD 805 seemed to be good options too. Later I ordered an RD805 for one of my friends. Comparing models was a bit hard, as there were not many reviews of these projectors on the internet.

Most SMPs offer 1000 lumens of brightness and a 1000:1 contrast ratio. They have a resolution of 800x450 pixels. Those specs are adequate for the price you are paying.

I will post a comparative review of the UC40 and RD805 in my next post.

Friday, August 5, 2016

Integrating Bit Bucket with Sonar

Following up on my previous post on generating a Sonar violations report by email, this post is about how I integrated Bitbucket with Sonar.

This integration generated comments indicating sonar violations on file changes within a pull request. It will enable the visibility of violations being introduced from code within a GIT feature branch, at the time of pull request to merge the branch with the master. It will further make the life of peer reviewer a lot easy.

A free add-on to Bit Bucket called 'Sonar for Bitbucket Cloud' together with Bitbucket plugin for SonarQube were used for the integration. The integration would do followings.

Now, create a file sonar.json in the root of code trunk folder and set configuration values. To figure out the project key, you can refer to the SonarQube dashboard or the sonar settings file used for your sonar analysis.

{

"sonarHost": "",

"sonarProjectKey": ""

}

/extensions/plugins directory and restart the sonar server.

A seperate sonar settings file was created (I named it as sonar-project-bitbucket.properties) to include additional properties for this build. You can get an good overview of Bitbucket plugin sonar properties from their web page. It will set sonar analysis mode to 'issues', you wont be able to see the analysis results being published to sonar dashboard. Do not get confused looking at the sonar dashboard.

# .. same values on other settings as existing sonar-project.properties file

#bitbucket plugin

sonar.bitbucket.repoSlug=

sonar.bitbucket.accountName=

sonar.bitbucket.teamName=

sonar.bitbucket.apiKey=

sonar.bitbucket.oauthClientKey=

sonar.bitbucket.oauthClientSecret=

#sonar.bitbucket.branchName=${GIT_BRANCH} --> This property added as a command line argument in jenkins build

sonar.host.url=

sonar.analysis.mode=issues

In order to generate comments on pull requests from a branch, you need to analyse the code of the relevant branch. Hence it will be a good idea to go with a parameterized Jenkins build where you can input on which branch to checkout in Jenkins. Here, GIT branch name will be taken from a Jenkins build parameter (which will be available as an environment variable) since it does not work when included it inside sonar-properties file. It has to be provided as an seperate argument within Jenkins build itself.

There is a GIT Parameter Plugin for Jenkins through which the same can be done, but it did not work well for me.

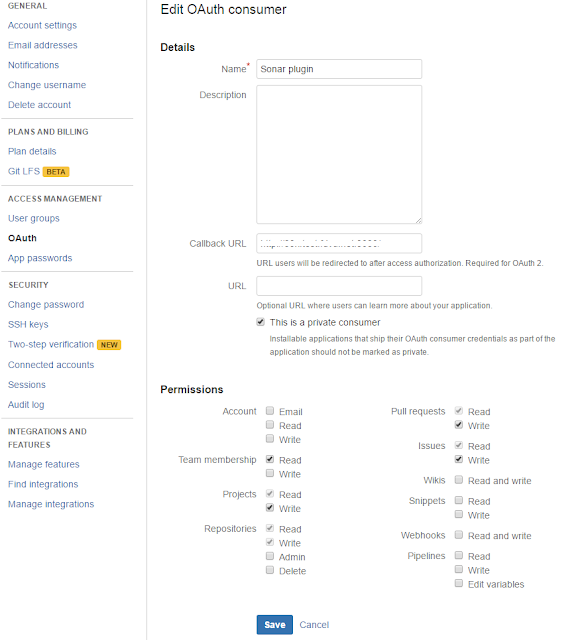

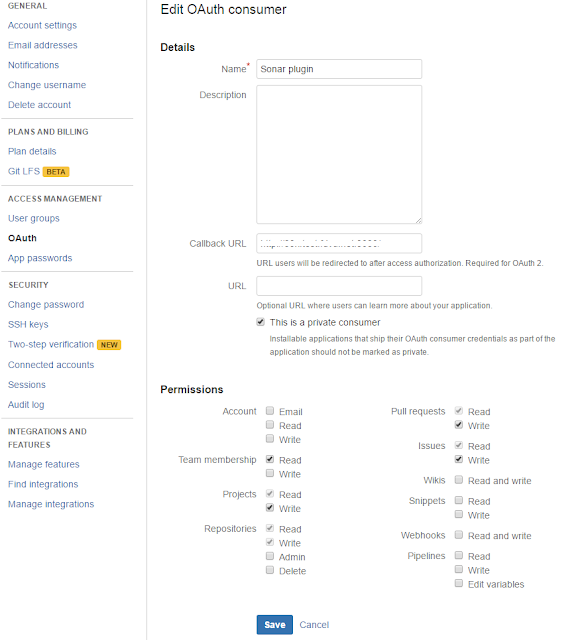

To generate an OauthClient key, go to settings of your Bitbucket account, go to OAuth from the left menu, and click on add oauth consumer button. A sample settings of an OAuth consumer is shown below. Make sure you fill the 'Call back URL' field; otherwise it will not work. I just put my Jenkins URL as the callback URL (It does not matter, you can use any URL; may be sonar server URL).

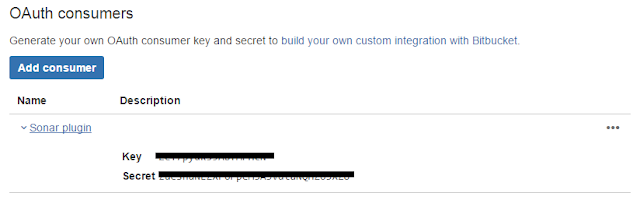

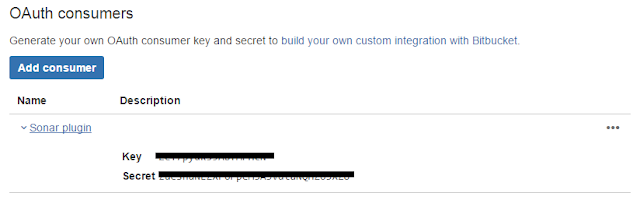

After creating OAuth consumer, click on it to get the oauthClientKey oauthClientSecret.

We have used SonarQube plugin for Jenkins. Since we had to configure a parameterized job, we considered GIT Parameter Plugin, but it did not work well for us. Hence a string parameterized build was configured as below.

In order to enable sonar-project-bitbucket.properties, following two commands to be executed as a windows command execution build setp, before triggering sonar analysis. I tried specifying sonar-project-bitbucket.properties file in 'Path to project properties' field of Sonar Scan task configuration, but it did not work for me. Hence I had to do this work around.

del sonar-project.properties

copy sonar-project-bitbucket.properties sonar-project.properties

Following is how sonar scanner build step configured. Sonar scanner installation has been configured via manage Jenkins.

This integration generates comments indicating Sonar violations on the files changed within a pull request. It makes violations introduced by code in a Git feature branch visible at the time of the pull request to merge the branch into master. It also makes the life of the peer reviewer a lot easier.

A free add-on for Bitbucket called 'Sonar for Bitbucket Cloud', together with the Bitbucket plugin for SonarQube, was used for the integration. The integration does the following.

- Shows all relevant SonarQube statistics for a Bitbucket repository, such as test coverage, technical debt, code duplication, and found code issues, on Bitbucket's overview page.

- Generates pull request comments for found code issues.

Installing and Configuring Sonar for Bitbucket Cloud Plugin

Installing the plugin on Bitbucket is pretty straightforward. You need the Bitbucket Cloud version for this. Log in with the account (team/personal) you want to configure, visit https://marketplace.atlassian.com/plugins/ch.mibex.bitbucket.sonar/cloud/overview and press the 'Get it now' button to install the free plugin.

Now, create a file named sonar.json in the root of the code trunk folder and set the configuration values. To figure out the project key, refer to the SonarQube dashboard or the Sonar settings file used for your analysis.

    {
      "sonarHost": "",
      "sonarProjectKey": ""
    }

Installing and Configuring Bitbucket Plugin for SonarQube

Please follow the instructions at https://github.com/mibexsoftware/sonar-bitbucket-plugin carefully. Download the plugin from the release page, place it inside the /extensions/plugins directory, and restart the Sonar server.

A separate Sonar settings file was created (I named it sonar-project-bitbucket.properties) to hold the additional properties for this build. You can get a good overview of the Bitbucket plugin's Sonar properties from its web page. Because this file sets the Sonar analysis mode to 'issues', the analysis results will not be published to the Sonar dashboard, so do not get confused when looking at the dashboard.

# .. same values on other settings as existing sonar-project.properties file

#bitbucket plugin

sonar.bitbucket.repoSlug=

sonar.bitbucket.accountName=

sonar.bitbucket.teamName=

sonar.bitbucket.apiKey=

sonar.bitbucket.oauthClientKey=

sonar.bitbucket.oauthClientSecret=

#sonar.bitbucket.branchName=${GIT_BRANCH} --> This property added as a command line argument in jenkins build

sonar.host.url=

sonar.analysis.mode=issues

To generate comments on pull requests from a branch, you need to analyse the code of that branch. Hence it is a good idea to use a parameterized Jenkins build where you can specify which branch to check out. Here, the Git branch name is taken from a Jenkins build parameter (which is available as an environment variable), since it does not work when included in the sonar properties file. It has to be provided as a separate argument within the Jenkins build itself.
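As a small illustration of that last point, the sketch below turns a Jenkins build parameter into the extra scanner argument. The parameter name `BRANCH_NAME` is a hypothetical choice for this example; `sonar.bitbucket.branchName` is the property from the settings file above, passed with the scanner's usual `-D` syntax.

```python
import os


def branch_argument(environ=os.environ, parameter="BRANCH_NAME"):
    """Return the -D argument that passes the branch to the Sonar analysis."""
    # Jenkins exposes build parameters as environment variables.
    branch = environ.get(parameter, "").strip()
    if not branch:
        raise ValueError(f"Jenkins parameter {parameter} is not set")
    return f"-Dsonar.bitbucket.branchName={branch}"
```

With the parameter set to `feature/login`, this yields `-Dsonar.bitbucket.branchName=feature/login`, which is the kind of value that goes into the additional arguments of the scanner build step.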

There is a GIT Parameter Plugin for Jenkins through which the same can be done, but it did not work well for me.

To generate an OauthClient key, go to settings of your Bitbucket account, go to OAuth from the left menu, and click on add oauth consumer button. A sample settings of an OAuth consumer is shown below. Make sure you fill the 'Call back URL' field; otherwise it will not work. I just put my Jenkins URL as the callback URL (It does not matter, you can use any URL; may be sonar server URL).

After creating OAuth consumer, click on it to get the oauthClientKey oauthClientSecret.

Jenkins Configuration

We used the SonarQube plugin for Jenkins. Since we had to configure a parameterized job, we considered the Git Parameter Plugin, but it did not work well for us. Hence a string-parameterized build was configured as below.

To enable sonar-project-bitbucket.properties, the following two commands have to be executed as a Windows command build step before triggering the Sonar analysis. I tried specifying the sonar-project-bitbucket.properties file in the 'Path to project properties' field of the Sonar scan task configuration, but it did not work for me. Hence I had to use this workaround.

del sonar-project.properties

copy sonar-project-bitbucket.properties sonar-project.properties
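On a build agent that is not running Windows, the same swap could be done with a short script. The sketch below is an assumed equivalent of the two commands above, not part of the original setup; the function name is made up, and only the two file names come from this post.

```python
import shutil
from pathlib import Path


def use_bitbucket_properties(workspace):
    """Overwrite sonar-project.properties with the Bitbucket variant."""
    workspace = Path(workspace)
    source = workspace / "sonar-project-bitbucket.properties"
    target = workspace / "sonar-project.properties"
    if not source.is_file():
        raise FileNotFoundError(source)
    # copyfile replaces the target if it exists, so no separate delete is needed.
    shutil.copyfile(source, target)
    return target
```

Run it against the job workspace before the scanner step so the analysis picks up the Bitbucket-specific properties.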

The following shows how the Sonar scanner build step was configured. The Sonar scanner installation was configured via Manage Jenkins.

How to Use

Below is how to use the setup to generate comments on Bitbucket pull requests.

- After finishing work in a branch, create a pull request to merge the branch into master.

- Trigger the parameterized Jenkins build, specifying the branch name to build.

- Comments on Sonar violations will appear in the pull request itself!

Output

The generated comments in Bitbucket look like the example below.

Labels: BitBucket, Code Analysis, Email, Integration, Jenkins, Reporting, SonarQube

Wednesday, August 3, 2016

Generating Sonar violation report email from Jenkins

In my current project, we manage stories in Jira and commit our code to a Git repo on Bitbucket. We have configured Jenkins for continuous integration purposes. Recently we installed a SonarQube instance for static code analysis. Sonar has helped us a lot in identifying issues in the codebase.

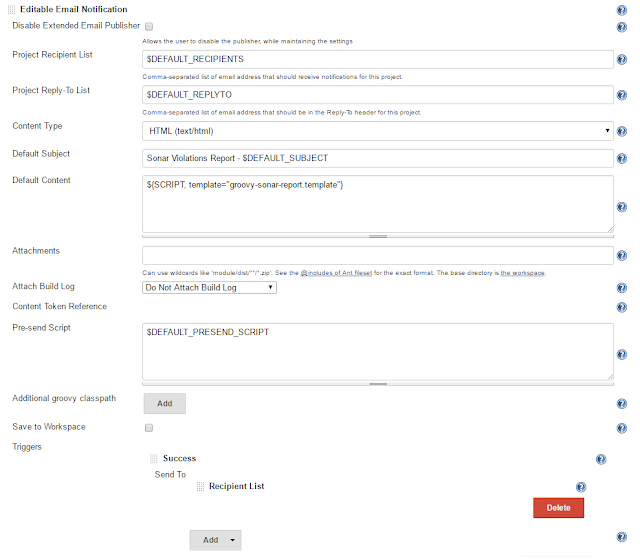

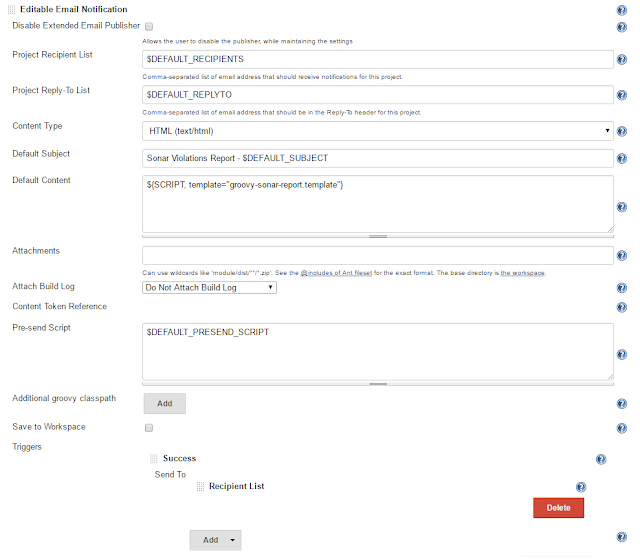

In your Jenkins job configuration, add the post-build step 'Editable Email Notification' and configure it as follows.

And the final email output was as follows :). Sorry, I had to erase a few content lines from the screenshot. I had a few styling issues when viewing it in Outlook 2013. Please see my comment at https://gist.github.com/isurusndr/dd6dc9fd9768f57eb93e6cdad9af7cf3 to find out how I solved them.

We had a Jira task workflow of To-do -> In progress -> Code review -> PO review -> Done. Once development was finished, we moved stories to the Code review state, where the code was reviewed and the feature was tested by a peer before moving to PO review status.

Although we had configured Sonar, it was not easy to directly identify the new violations introduced by committed code.

We tried two approaches to make developers' lives easier by making new violations visible. The first approach was to generate a daily email report of new Sonar violations created by each developer. The second approach was an integration of SonarQube and Bitbucket, which I will describe in a separate post.

With a Sonar server configured at, say, http://localhost:9000/, the following is how we set up the daily email report of Sonar violations.

The daily Sonar violations email report shows the new violations introduced by code added or modified by commits during the day. A Jenkins job triggers the analysis at 11.00 pm nightly. It is important to make sure the Jenkins job completes before midnight, since the report is generated at the end of the job. If report generation slips into the very beginning of the following day, an empty report will result.
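The timing constraint is easy to check with a little date arithmetic. The helper below is only an illustration with made-up durations; it tests whether a job that starts at a given time still finishes on the same calendar day.

```python
from datetime import datetime, timedelta


def report_on_same_day(start, duration):
    """True if a job started at `start` finishes on the same calendar day."""
    return (start + duration).date() == start.date()


start = datetime(2016, 8, 3, 23, 0)                   # nightly run at 11.00 pm
fits = report_on_same_day(start, timedelta(minutes=45))   # ends 11.45 pm
spills = report_on_same_day(start, timedelta(minutes=75)) # ends 12.15 am next day
```

With an 11.00 pm start, the job has less than an hour to finish; a run that takes longer would push report generation past midnight and produce the empty report described above.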

Sonar Configuration

SonarQube server v5.4 was used with no password protection. Keeping authentication disabled, which is the default configuration of the Sonar server, is important so that the script template can access the Sonar API.

Jenkins Configuration

Jenkins version 1.6 was used to trigger a nightly Sonar analysis. The Jenkins plugins below were installed and configured on the Jenkins instance.

- SonarQube plugin: an integration of SonarQube into Jenkins.

- Email Extension plugin: a replacement for Jenkins's default email publisher that provides some advanced email features.

- Groovy plugin: enables executing Groovy code.

After installing the plugins, go to Manage Jenkins → Configure System and apply the following configuration.

- Under the SonarQube servers section, enter a name of your choice and the server URL of the Sonar server instance (e.g. http://localhost:9000), and select the correct server version (e.g. '5.3 or higher' in our case).

- Under the Global Properties section, enable environment variables and add a name/value pair of 'SONAR_PROJECT_KEY' and the Sonar key of the analysis that you configured in the Sonar analysis properties (i.e. the value of the sonar.projectKey property in the Sonar arguments). You can easily find the Sonar key on the Sonar project dashboard.

- Under the Groovy section, add a Groovy installation. Set GROOVY_HOME to the \bin location of the Groovy installation folder on your machine (e.g. C:\groovy-2.4.6\bin).

- In the SonarQube Scanner section, add a SonarQube scanner installation. Set a name, and set SONAR_RUNNER_HOME to the path of the Sonar scanner installation on your machine (e.g. C:/SonarQube/sonarqube-5.4/sonar-scanner-2.6).

- In the Jenkins Location section, fill in the URL of the Jenkins instance and set an email address as the System Admin e-mail address.

- Set the SMTP email server settings under the Email Notification section. You may even configure it to use your Gmail account here.

- Under Extended Email Notification, set your email in Default Recipients. You may set 'Default Subject' to something like "$PROJECT_NAME - Build # $BUILD_NUMBER - $BUILD_STATUS!" A sample Default Content would be as follows.

$PROJECT_NAME - Build # $BUILD_NUMBER - $BUILD_STATUS:

Check console output at $BUILD_URL to view the results.
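To get a feel for what these placeholders turn into, here is a small Python sketch. It only imitates the substitution with Python's string.Template. The Email Extension plugin expands the tokens itself, and the build values below are made up for the example.

```python
from string import Template

# Subject and content as configured above.
SUBJECT = "$PROJECT_NAME - Build # $BUILD_NUMBER - $BUILD_STATUS!"
CONTENT = (
    "$PROJECT_NAME - Build # $BUILD_NUMBER - $BUILD_STATUS:\n"
    "\n"
    "Check console output at $BUILD_URL to view the results."
)


def expand(template_text: str, values: dict) -> str:
    """Replace each $NAME placeholder with its value (imitation only)."""
    return Template(template_text).substitute(values)


# Hypothetical values for one build.
example_build = {
    "PROJECT_NAME": "nightly-sonar",
    "BUILD_NUMBER": "42",
    "BUILD_STATUS": "Successful",
    "BUILD_URL": "http://localhost:8080/job/nightly-sonar/42/",
}

print(expand(SUBJECT, example_build))
print(expand(CONTENT, example_build))
```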


Then configure a Jenkins build job for the project. Add the build step 'Execute SonarQube Scanner' in the Jenkins job configuration page. Select the correct SonarQube Scanner from the dropdown. You may leave the other fields blank or at their default values.

You may schedule the job to run before midnight (between 11.00 pm and 11.15 pm in my example).

With the Email Extension plugin of Jenkins, it is possible to generate HTML emails based on Groovy templates. When I was searching for similar work, I found an interesting Groovy template script at https://gist.github.com/x-cray/2828324. The script was developed for an earlier version of SonarQube server, but the API had changed a lot by SonarQube version 5.0. (The newest API documentation can be found at https://sonarqube.com/web_api/api/sources ) Hence I had to upgrade the script to work with Sonar v5. The upgraded script can be found at https://gist.github.com/isurusndr/dd6dc9fd9768f57eb93e6cdad9af7cf3.

Configure another Jenkins job and schedule it to run 1-2 hours after the Sonar analysis job configured in the step above. Just add the steps to build the project, because we will be using this job only to send the email report. It is important to have a separate job to send the Sonar report, because Sonar takes some time to persist the data into its database after the analysis. Hence, if you try to send the email within the same job as the analysis, the email will always report no violations.

Copy the latest script into a file and save it as groovy-sonar-report.template. Place it inside the email templates folder of the Jenkins installation (the \email-templates folder). You can also test the email template in the Jenkins interface. Just enter the email template name (e.g. groovy-sonar-report.template) and select a build to run it against.

In your Jenkins job configuration, add the post-build step 'Editable Email Notification' and configure it as follows.

And the final email output was as follows :). Sorry, I had to erase a few content lines from the screenshot. I had a few styling issues when viewing it in Outlook 2013. Please see my comment at https://gist.github.com/isurusndr/dd6dc9fd9768f57eb93e6cdad9af7cf3 to find out how I solved them.

Tuesday, April 26, 2016

Upgrading SonarQube From v4.5 to v5.4

A few days back I upgraded the SonarQube instance we use for one of our projects from version 4.5 to version 5.4. We run it on a dedicated server using MsSQL as the back-end database. We also have a scheduled Jenkins job to trigger code analysis overnight.

The Sonar upgrade instructions, which can be found at http://docs.sonarqube.org/display/SONAR/Upgrading, were very helpful. Read them carefully before you start upgrading.

The most important task before you do the upgrade is to back up the database. Instead of creating a database backup, I just duplicated the existing database.

I used the Copy Database Wizard of Microsoft SQL Server Management Studio. The wizard is straightforward to use. On the screen for selecting the transfer method, I selected 'Use SQL Management Object Method' since my database was not very large. I tried 'Use the detach and attach method', but it gave some errors during the database copy.

After the database copy, I downloaded SonarQube 5.4 from http://www.sonarqube.org/downloads/ and followed the upgrade instructions.

After updating sonar.properties in the SonarQube v5.4 installation, I tried starting Sonar and got the following error.

WrapperSimpleApp: Encountered an error running main: org.sonar.process.MessageException: Unsupported JDBC driver provider: jtds

org.sonar.process.MessageException: Unsupported JDBC driver provider: jtds

When I inspected sonar.properties, I found that the issue was with the connection string. My original connection string looked like this:

sonar.jdbc.url=jdbc:jtds:sqlserver://localhost:1433/SonarQube;SelectMethod=Cursor

The new one should be (note the changes from the previous connection string):

sonar.jdbc.url=jdbc:sqlserver://localhost;databaseName=SonarQube
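The change between the two formats can be sketched in a few lines of Python. This is only an illustration of the rewrite I did by hand. The function name jtds_to_mssql_url is made up, and it handles only the simple host[:port]/database shape shown above. Unlike my connection string, it keeps an explicit port if one is present (I left it out and relied on the default port), and it drops the trailing properties as I did.

```python
def jtds_to_mssql_url(jtds_url: str) -> str:
    """Rewrite jdbc:jtds:sqlserver://host[:port]/db[;props] into the
    jdbc:sqlserver://host[:port];databaseName=db form."""
    prefix = "jdbc:jtds:sqlserver://"
    if not jtds_url.startswith(prefix):
        raise ValueError("not a jTDS SQL Server URL: " + jtds_url)

    rest = jtds_url[len(prefix):]
    # Everything after the first ';' is a list of driver properties; drop it.
    location, _, _properties = rest.partition(";")
    # The database name follows the server as a path segment in the jTDS form.
    server, _, database = location.partition("/")

    url = "jdbc:sqlserver://" + server
    if database:
        url += ";databaseName=" + database
    return url


print(jtds_to_mssql_url(
    "jdbc:jtds:sqlserver://localhost:1433/SonarQube;SelectMethod=Cursor"))
# prints: jdbc:sqlserver://localhost:1433;databaseName=SonarQube
```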

After fixing the connection string, SonarQube started without issues. Then I upgraded the Sonar Runner (now called Sonar Scanner) installation on the Jenkins node to Sonar Scanner 2.6, which can be downloaded at http://docs.sonarqube.org/display/SCAN/Analyzing+with+SonarQube+Scanner. Moving the settings from the previous Sonar Runner installation into sonar-scanner.properties of the new Sonar Scanner was straightforward. Note that the connection string format there remains the same as in the previous version (unlike in SonarQube's sonar.properties).

As the final step, I upgraded the SonarQube Scanner for Jenkins plugin. The new Jenkins plugin requires some extra configuration compared to the previous plugin. Have a look at http://docs.sonarqube.org/display/SCAN/Analyzing+with+SonarQube+Scanner+for+Jenkins

I found that the SonarQube v5.4 dashboard looks more exciting than the previous version. Furthermore, there is one big enhancement in this new version which has an impact on the integration with Visual Studio Online: no direct database access is necessary anymore.
